Stress-Testing DracoBFT: What We Learned Processing $48.6B on Testnet

We ran our testnet with zero rate limits to validate DracoBFT under adversarial conditions. We didn’t simulate chaos, we invited it. Here's what we learned.

The Experiment

DracoBFT is our consensus layer: stake-weighted leader selection, pipelined block production, two-chain deterministic finality, and partial synchrony with n = 3f + 1 Byzantine tolerance.

Target specs: we settled on 150ms finality with a 75ms block time and wanted to identify the maximum TPS in a production environment. In internal devnet stress tests, we had reached roughly 300k-400k TPS.
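To make those targets concrete, here is a minimal sketch of the standard BFT arithmetic behind them. It assumes that two-chain finality means a block is final once two consecutive block intervals have passed, which is how 75ms blocks would give 150ms finality. It also counts validators rather than stake for simplicity. The function names are illustrative, not DracoBFT's API.

```python
def max_faulty(n: int) -> int:
    """Largest f such that n >= 3f + 1."""
    if n < 1:
        raise ValueError("need at least one validator")
    return (n - 1) // 3


def quorum_size(n: int) -> int:
    """Votes needed so that any two quorums share at least f + 1 validators."""
    return n - max_faulty(n)


def finality_ms(block_time_ms: int, chain_depth: int = 2) -> int:
    """Assumed model: a block is final after `chain_depth` block intervals."""
    return block_time_ms * chain_depth


validators = 100
tolerated = max_faulty(validators)   # Byzantine validators tolerated
needed = quorum_size(validators)     # votes required per decision
latency = finality_ms(75)            # milliseconds to finality
```

With 100 validators this tolerates 33 Byzantine nodes and needs 67 votes per decision. A stake-weighted variant would sum stake instead of counting heads.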

But specs on paper mean nothing. We needed to see what happens when real users and real adversaries interact with the system at scale.

Also, TPS for Hotstuff depends on the kinds of transactions included in each block, since not every transaction type is equally expensive to process.

So we launched on testnet with:

Zero rate limits

Open faucet (10k USDC/account/day)

No API documentation (users had to reverse-engineer from UI)

Full production architecture

We wanted to break things. We succeeded.

Like

says:

you have to explore the limits to find where the tolerances are

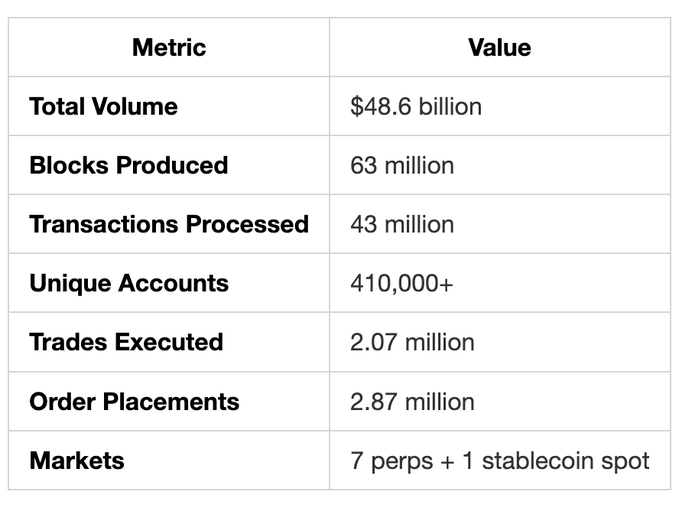

The Numbers

Over several weeks of testnet operation:

For context: 400k+ users with active positions and non-zero balances exceeds the active trader count of most DeFi perp DEXs in production.

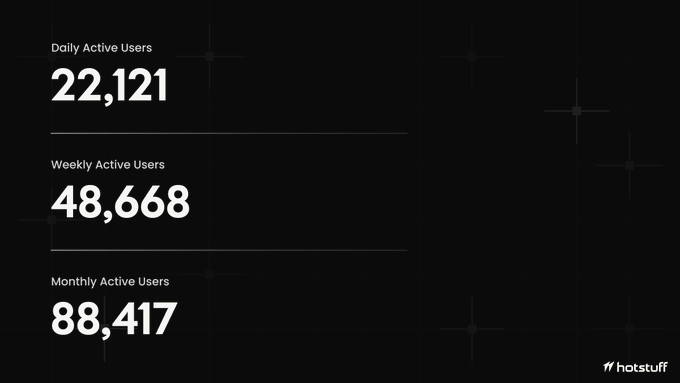

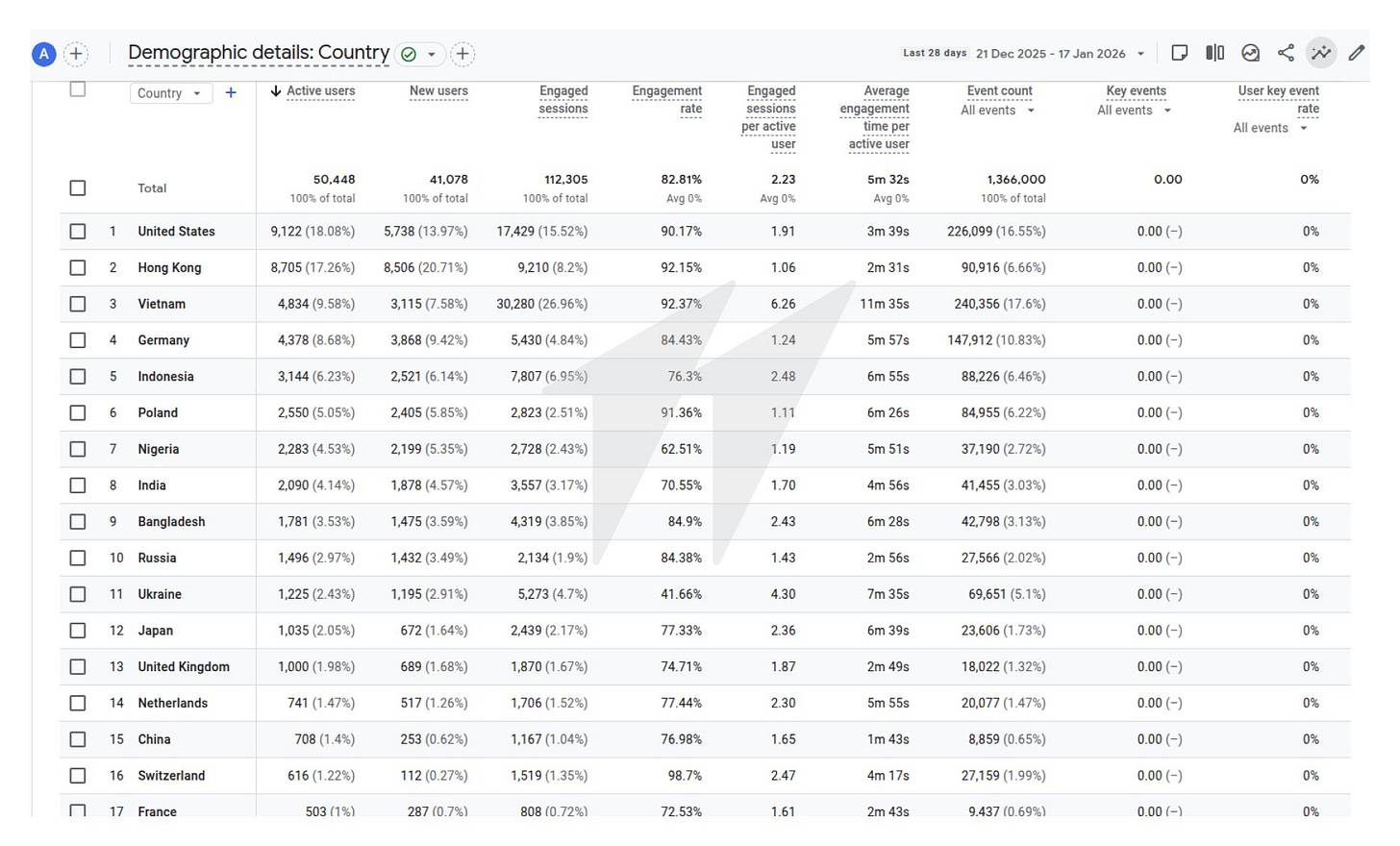

User Activity & Global Distribution

Users came from everywhere: the United States, Hong Kong, Vietnam, Germany, Indonesia, Poland, Nigeria, the EU, India, Russia, and more. We saw 50,000+ active users across 143 countries, with over 1.3 million events tracked. High engagement rates (82%+) and average session times of 5+ minutes indicated real usage, not just drive-by curiosity.

What We Were Actually Testing

DracoBFT introduces architectural innovations that needed validation under real load:

Chained Change-Log Commitments (CLH): Instead of recomputing full Merkle state roots every block, we hash only modified keys and chain forward. For high-frequency financial workloads, this is the difference between theoretical throughput and actual latency at scale.

Side-loops architecture: Auxiliary execution domains where validators perform real-world operations without blocking consensus. This separation was critical: when auxiliary services failed, consensus kept running.

Validator service model: Validators as infrastructure providers, not just consensus participants.
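The change-log commitment idea can be sketched in a few lines. This is an illustration only, not DracoBFT's actual encoding: SHA-256, the sorted key order, the 4-byte length prefixes, and the name `changelog_commitment` are all assumptions, and deletions are not modelled.

```python
import hashlib
from typing import Dict

GENESIS = bytes(32)  # hypothetical all-zero starting commitment


def changelog_commitment(prev: bytes, changes: Dict[bytes, bytes]) -> bytes:
    """Commit to the keys modified in one block, chained to the previous commitment."""
    h = hashlib.sha256()
    h.update(prev)
    for key in sorted(changes):  # fixed order so every validator agrees
        value = changes[key]
        # Length-prefix each field so key/value boundaries are unambiguous.
        h.update(len(key).to_bytes(4, "big"))
        h.update(key)
        h.update(len(value).to_bytes(4, "big"))
        h.update(value)
    return h.digest()


# Block 1 touches two keys, block 2 touches one. The work per block is
# proportional to the number of modified keys, not to total state size.
c1 = changelog_commitment(GENESIS, {b"alice": b"100", b"bob": b"50"})
c2 = changelog_commitment(c1, {b"alice": b"90"})
```

Because each commitment includes the previous one, tampering with any earlier change-log changes every later commitment. The trade-off against a full Merkle root is that this form does not by itself give compact proofs for individual keys.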

The testnet validated a core thesis: the consensus layer can remain stable even when everything around it is on fire.

Week 1: Initial Validation (Dec 5-15)

Announced December 5th. 80,000 trades processed within 12 hours.

At hour 10, users identified an HLV vault share display bug. Fix deployed in under an hour. First hard fork executed at hour 12, our first-ever network upgrade.

Community-reported issues:

2 major (1 in auxiliary services, 1 in HLV)

15 medium

30 low/informational

Only 2 touched validator node codebase

Dec 6-16: ~50k trades daily, <2,500 active traders. Organic activity with human trading patterns. Team used this window to ship fixes efficiently.

Takeaway: Core consensus was solid from day one.

Week 2: Adversarial Scaling (Dec 15+)

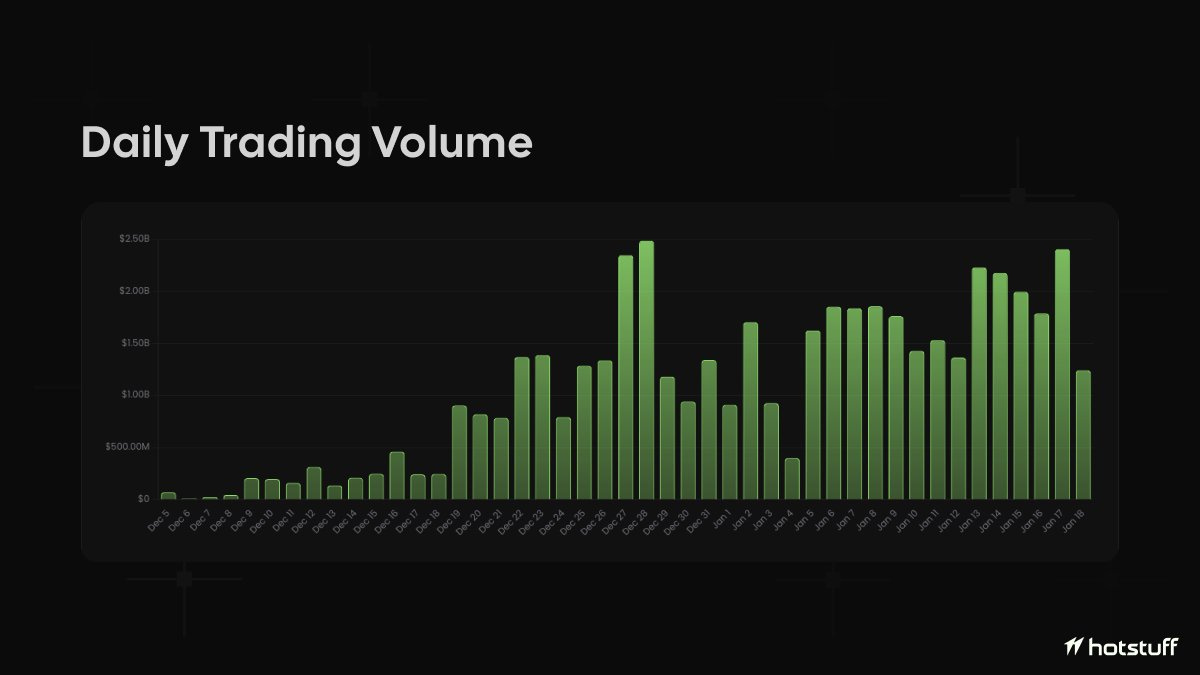

Post-December 15th, volumes doubled while active trader count barely moved. >$500M daily with only ~1,000 additional traders.

Users discovered faucet arbitrage: claim on Account A → transfer to Account B → repeat. Clever exploitation of our intentionally permissive design.

Then: 50,000-75,000 new accounts funded daily via inorganic bot activity. Users had reverse-engineered our API purely from UI network requests; we hadn’t published any documentation. (Similar to how Lighter.xyz was doing >$1B daily on mainnet without public API docs.) We traced this activity back to IPs in Hong Kong and Germany.
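A back-of-the-envelope calculation shows how much capital this pattern can create. The sketch assumes every newly funded account claimed the full 10k USDC daily allowance exactly once. That is an assumption for illustration, not a measured figure.

```python
FAUCET_USDC_PER_ACCOUNT_PER_DAY = 10_000  # testnet faucet setting


def daily_faucet_inflow(new_accounts: int,
                        claim: int = FAUCET_USDC_PER_ACCOUNT_PER_DAY) -> int:
    """Upper-bound faucet inflow if each new account claims once per day."""
    return new_accounts * claim


low = daily_faucet_inflow(50_000)    # lower end of the observed account range
high = daily_faucet_inflow(75_000)   # upper end of the observed account range
```

Under that assumption, the faucet alone could inject between 500M and 750M USDC of fresh test capital per day. A per-account cap does little once account creation is free.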

December 19th: first $500M+ day.

December 28th: $500M+ day.

December 28th: $2.5B volume, 120k+ trades.

The system was processing more activity than most production DEXs.

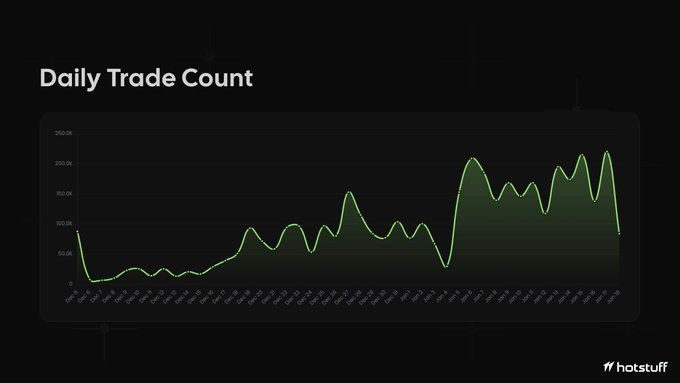

Trading Activity Over Time

The chart tells the story: organic growth in early December, then exponential scaling as automated systems discovered the API. Peak days saw 200k+ trades processed. The validator nodes handled it all without consensus failures.

What Broke (And What Didn’t)

What stayed solid:

Validator consensus layer

Block production (63M blocks, zero safety violations or liveness breaks, no consensus halts)

Transaction ordering and execution

DracoBFT core protocol

What failed:

Auxiliary services aggregating orderbook state

Observability infrastructure

Rate limiting (intentionally absent)

The separation was exactly as designed. DracoBFT’s architecture isolates consensus from auxiliary services. When the orderbook aggregation service went down, validators kept producing blocks. User transactions continued processing. The core remained stable.

This is the whole point. Side-loops and auxiliary services can fail without taking down consensus. The failure mode was graceful degradation, not system collapse.

The biggest bottleneck in our architecture wasn’t the nodes at all. It was an aux service that (among other things) recreated our orderbook from orderbook diffs for real-time UI and API displays. Interestingly, this caused our UI to display negative slippage due to phantom orders that didn’t exist in validator state at all.
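Here is a minimal sketch of how a diff-based replica can end up with phantom orders. The `Diff` and `Book` types and the sequence-number scheme are hypothetical and are not our aux service's real format. The point is only that a single missed "remove" diff leaves an order in the replica that the source of truth no longer has, and that a sequence check turns silent drift into a detectable gap.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class Diff:
    seq: int
    order_id: str
    price: Optional[float] = None  # None means the order was removed
    size: float = 0.0


@dataclass
class Book:
    orders: Dict[str, Tuple[float, float]] = field(default_factory=dict)
    last_seq: int = 0

    def apply(self, diff: Diff, strict: bool = True) -> None:
        # In strict mode, refuse to apply a diff that skips a sequence number.
        if strict and diff.seq != self.last_seq + 1:
            raise ValueError(f"gap: expected {self.last_seq + 1}, got {diff.seq}")
        if diff.price is None:
            self.orders.pop(diff.order_id, None)
        else:
            self.orders[diff.order_id] = (diff.price, diff.size)
        self.last_seq = diff.seq


stream = [Diff(1, "A", 100.0, 5.0), Diff(2, "A"), Diff(3, "B", 101.0, 2.0)]

full = Book()
for d in stream:
    full.apply(d)

lossy = Book()  # drops diff 2 and does not check sequence numbers
for d in stream:
    if d.seq != 2:
        lossy.apply(d, strict=False)

gap_detected = False
checked = Book()  # drops diff 2 but checks sequence numbers
try:
    for d in stream:
        if d.seq != 2:
            checked.apply(d)
except ValueError:
    gap_detected = True
```

After the run, `lossy` still holds order A, which the complete replica has already removed. `checked` stops at the gap instead, which is the signal to resync from a full snapshot.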

The Observability Problem

State machines are difficult to debug at DracoBFT speeds.

Traditional observability failed:

Sentry: Couldn’t handle throughput

Loki + Grafana: 3+ minute query times, unusable for debugging

Disk storage: Impractical at the log volumes we were generating

We needed granular data for debugging but conventional infrastructure couldn’t cope.

Solution: Custom logging and monitoring solution (BeaconX). Queries dropped from 3+ minutes to under 1 second.

This worked until log volume scaled with unique addresses. Memory spiked across nodes.

Lesson: Purpose-built L1s require purpose-built observability. Standard tooling assumes standard throughput.

The Economic Discovery

The most important finding wasn’t technical. It was economic.

The real rate limiter in production is money.

Without economic stakes, users interacted with our system in ways no rational actor ever would:

Faucet farming across thousands of accounts

Stress patterns that constitute effective DoS attacks

Trading behaviors that would be economically irrational with real capital

The testnet scaled beyond mainnet expectations because testnet removes the natural economic friction that regulates production systems.

This is why battle-tested teams implement testnet faucet restrictions. We learned this empirically so we could build the right architecture for mainnet.

Interesting edge case: USDT/USDC spot prices deviated significantly due to the absence of real arbitrage. Users claimed USDT and immediately dumped it for USDC (our settlement currency). Without peg arbitrage opportunities, markets behave irrationally.

Technical Learnings

1. Formal Specification Pays Dividends

We used Quint by Informal Systems for formal specification. Writing a formal spec and enforcing constraints helped us identify event loop flow issues before they hit production.

If you’re building consensus mechanisms, formal specification isn’t optional. Shoutout to the team at @informalinc for building tools that make this tractable.

2. TEE Debugging is 10x Harder

Internal experiments with confidential TEEs confirmed this. Debugging inside trusted execution environments introduces significant friction. We’ve mapped architectural solutions and will ship this as Hotstuff exits beta.

3. Architecture Separation Works

When auxiliary services failed, consensus didn’t. The side-loop architecture validated its core premise: real-world operations shouldn’t block consensus.
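One common way to get this kind of isolation is a bounded, non-blocking hand-off between the consensus loop and each auxiliary domain. The sketch below is a simplified illustration under that assumption, not our validator code. `SideLoop`, `offer`, and `produce_blocks` are hypothetical names.

```python
import queue
from typing import List


class SideLoop:
    """Hypothetical auxiliary domain fed through a bounded inbox."""

    def __init__(self, capacity: int) -> None:
        self.inbox: "queue.Queue[int]" = queue.Queue(maxsize=capacity)
        self.dropped = 0

    def offer(self, event: int) -> None:
        try:
            self.inbox.put_nowait(event)  # never waits on the consumer
        except queue.Full:
            self.dropped += 1  # aux is down or slow: shed load instead of blocking


def produce_blocks(count: int, side_loop: SideLoop) -> List[int]:
    chain: List[int] = []
    for height in range(1, count + 1):
        chain.append(height)      # stand-in for propose, vote, commit
        side_loop.offer(height)   # fire-and-forget notification
    return chain


# Nothing drains the inbox, which simulates a crashed auxiliary service.
aux = SideLoop(capacity=3)
chain = produce_blocks(10, aux)
```

All ten blocks are produced even though the auxiliary consumer never runs. The cost is that the side-loop misses events and has to catch up or resync once it recovers.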

4. Testnet ≠ Mainnet Behavior

This seems obvious but isn’t. Testnet stress testing revealed edge cases that rational economic actors would never trigger. That’s exactly what we needed to harden the system.

Final Sprint

New Year’s Eve. Auxiliary service down again. The team shipped patches at midnight while others celebrated.

After an intensive sprint: permanent fixes deployed, supporting infrastructure scaled.

The good news: Validator node software remained stable throughout. Core DracoBFT consensus never failed. We added markets, shipped UI fixes, and grew our Discord to 10,000+ users.

Why This Matters

Building a purpose-built L1 from scratch is fundamentally different from forking Geth or Reth:

No inherited battle-testing

Every component validated under adversarial conditions

No safety nets

DracoBFT isn’t a fork. It’s ground-up consensus design with innovations that required stress-testing we couldn’t simulate.

For builders working on novel consensus architectures: the problems are hard. Formal specification, custom observability, and adversarial testnet conditions are non-negotiable.

Acknowledgments

Building consensus from scratch requires standing on the shoulders of giants.

Our Team @hotstuff_labs: We shipped fixes on Christmas Eve and at midnight on New Year’s Eve. Debugged state machines at 75ms block times. Built custom observability from scratch. That’s the caliber of people building this.

Informal Systems: Quint made formal specification tractable. If you’re building BFT systems, their tooling is essential. Extremely grateful to @zarinjo & @bugarela, who provided detailed feedback on our spec. Thanks to their feedback, we’re looking forward to rewriting our original Quint spec in Choreo. No TLA+.

Commonware: The fact that Simplex ships with an embedded VRF is truly amazing. A masterclass by the Commonware folks @_patrickogrady. Although we’re partial to Golang, the Rust vibe is strong.

@hyperliquidx: We run a Hyperliquid testnet non-validating node and observed similar scaling patterns and challenges. Massive respect to the team including @chameleon_jeff @Iliensinc @xulian_hl for navigating these problems at scale. As far as we know, they’re the only other production-grade chain operating with a similar architecture.

For Builders

If you’re working on novel consensus architectures, especially in the HotStuff family, BFT variants, or high-performance L1s, we’d love to connect.

The problems are hard. The community of people working on them seriously is small.

Reach out: @hotstuff_labs

What’s Next

Testnet stress testing is complete. DracoBFT is battle-hardened. Infrastructure has been scaled. Edge cases resolved.

Mainnet is next.